Have you ever seen a photo on the Internet and wondered whether it was generated by AI? That's how prevalent generative AI is these days. Sometimes the post won't even say so, which makes AI content hard to identify. But Meta is looking to make things clearer.

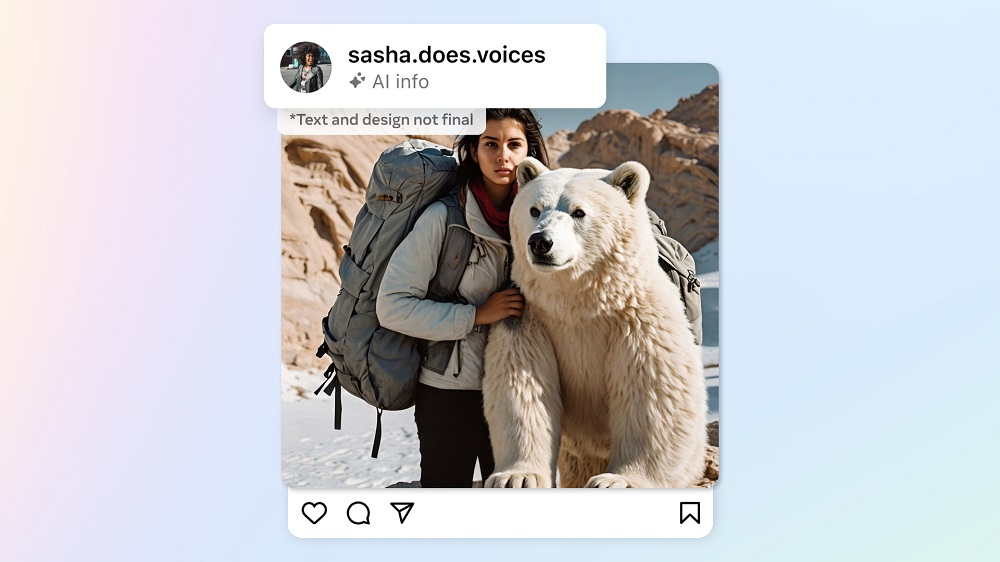

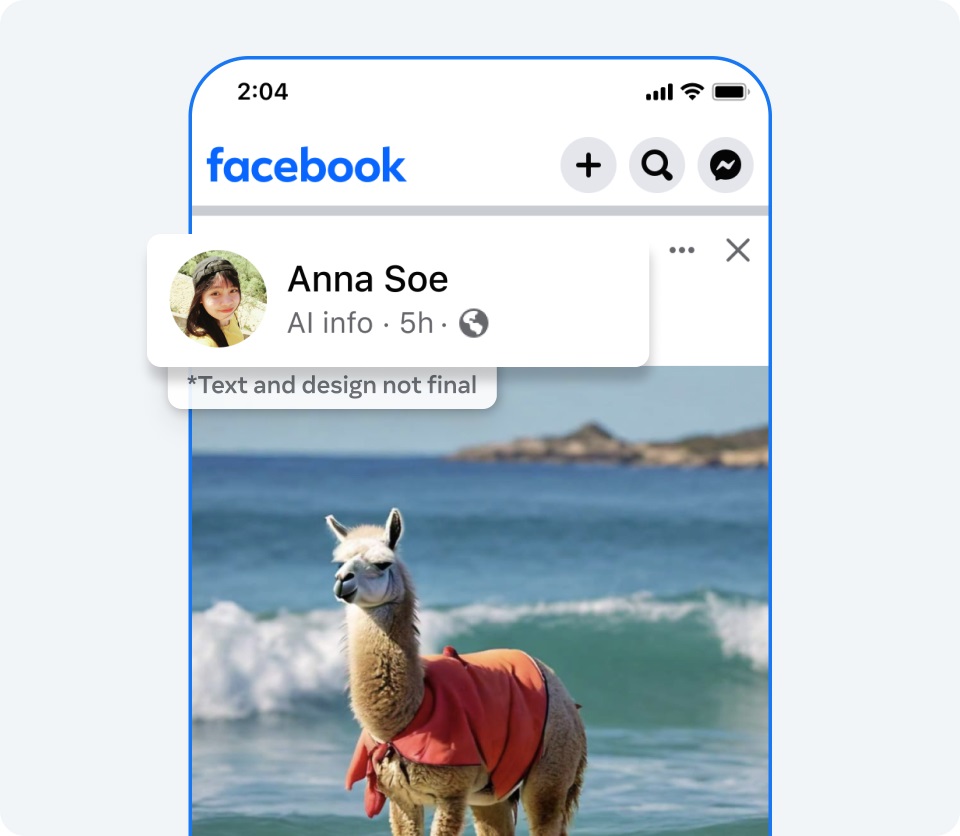

Recently, Meta announced it would start labelling all AI-generated images on Facebook, Instagram, and Threads. The company is working with unspecified industry partners to develop common technical standards for identifying AI content. The goal is not just to identify whether a photorealistic image was generated by AI, but to do the same for video and audio.

Photorealistic images created via Meta AI will carry visible markers, invisible watermarks, and embedded metadata to help identify them as AI-generated. The visible marker is likely to read "Imagined by AI". Meta also confirmed that it can label images generated by other AI platforms from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock.

Generative AI is improving rapidly, and there's a real concern about AI content being used to deceive others, for example, non-professional artists selling generated art for money. There is also the issue of AI infringing on copyrights, so knowing how to identify AI content is crucial.

However, are these "common technical standards" enough to prevent AI abuse? Share your thoughts in the comments and stay tuned to TechNave for more news like this.
