Chatbots are doing more and more these days. They can answer questions, write articles to a certain extent, and even write code. In fact, platforms like ChatGPT can apparently be used to write malware, despite existing protections.

According to Digital Trends, a researcher named Aaron Mulgrew managed to create zero-day malware using ChatGPT. For context, zero-day malware is malicious software that exploits a vulnerability the software's developer has not yet discovered. Such malware can cause a lot of damage, depending on the severity of the vulnerability.

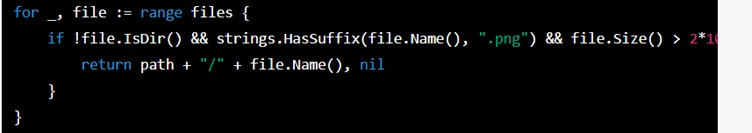

A prompt asking ChatGPT to write code that finds PNG files

In principle, this shouldn't have been possible, because OpenAI has safeguards meant to stop users from generating malicious code. However, Mulgrew got around these restrictions by asking the chatbot to write each of the malware's functions separately. Within a few hours, the Forcepoint security researcher had built malware that disguises itself as a screensaver app in order to steal data. If you want the details, you can check out the researcher's blog post.

This shows that ChatGPT can be as dangerous as it is useful, and OpenAI will have to significantly strengthen its safeguards to prevent this kind of misuse. But what do you think: should we be concerned about other ways ChatGPT could be misused? Share your thoughts in the comments and stay tuned to TechNave for more tech news.
